Leo McCormack, Archontis Politis, Thomas McKenzie, Chris Hold, and Ville Pulkki

Object-Based Rendering of Sound Scenes Captured with Multiple Ambisonic Receivers

Abstract

This article proposes a system for object-based six-degrees-of-freedom (6DoF) rendering of spatial sound scenes that are captured using a distributed arrangement of multiple Ambisonic receivers. The approach is based on first identifying and tracking the positions of sound sources within the scene, followed by the isolation of their signals through the use of beamformers. These sound objects are subsequently spatialized over the target playback setup, with respect to both the head orientation and position of the listener. The diffuse ambience of the scene is rendered separately by first spatially subtracting the source signals from the receivers located nearest to the listener position. The resultant residual Ambisonic signals are then spatialized, decorrelated, and summed together with suitable interpolation weights. The proposed system is evaluated through an in situ listening test conducted in 6DoF virtual reality, whereby real-world sound sources are compared with the auralization achieved through the proposed rendering method. The results of 15 participants suggest that in comparison to a linear interpolation-based alternative, the proposed object-based approach is perceived as being more realistic.
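The source-extraction, spatial-subtraction and interpolation steps described in the abstract can be sketched in Python with NumPy. This is a minimal sketch, not the beamformer design or interpolation scheme used in the paper. It assumes the following:

- first-order Ambisonic signals in ACN/SN3D format;
- source directions already supplied by the tracker;
- least-squares (pseudo-inverse) beamformers;
- energy-preserving inverse-distance gains over the nearest receivers.

All function names are hypothetical. Decorrelation and spatialization of the residuals are omitted.

```python
import numpy as np

def sh_order1_sn3d(azi, elev):
    """Real first-order spherical harmonics, ACN order (W, Y, Z, X), SN3D normalization."""
    return np.array([
        1.0,
        np.sin(azi) * np.cos(elev),
        np.sin(elev),
        np.cos(azi) * np.cos(elev),
    ])

def extract_sources(foa, doas):
    """Isolate source signals and return the residual (ambient) Ambisonic signals.

    Assumption: pseudo-inverse beamformers, which pass each tracked direction with
    unit gain and null the other tracked directions (valid for up to 4 sources).
    foa:  (4, T) first-order signals of one receiver
    doas: list of (azimuth, elevation) in radians, relative to this receiver
    """
    steer = np.stack([sh_order1_sn3d(a, e) for a, e in doas], axis=1)  # (4, K)
    weights = np.linalg.pinv(steer)                                     # (K, 4)
    sources = weights @ foa                                             # (K, T)
    residual = foa - steer @ sources  # spatially subtract the re-encoded sources
    return sources, residual

def interpolation_gains(listener, receivers, num_nearest=3, eps=1e-9):
    """Assumed inverse-distance gains for the receivers nearest the listener.

    Because the residuals are decorrelated before summation, the gains are chosen
    so that their squares (i.e. the summed energies) add up to one.
    """
    dists = np.linalg.norm(receivers - listener, axis=1)
    nearest = np.argsort(dists)[:num_nearest]
    w = 1.0 / (dists[nearest] + eps)
    w /= w.sum()
    return nearest, np.sqrt(w)

# Usage: two plane-wave sources are recovered exactly and leave no residual.
rng = np.random.default_rng(0)
s = rng.standard_normal((2, 1000))
doas = [(np.deg2rad(30.0), 0.0), (np.deg2rad(-100.0), np.deg2rad(20.0))]
steer = np.stack([sh_order1_sn3d(a, e) for a, e in doas], axis=1)
est, res = extract_sources(steer @ s, doas)
print(np.allclose(est, s), np.allclose(res, 0.0))  # True True
```

In real recordings the residual also carries reverberation and untracked sound. This is the part that the ambient stream renders from the receivers nearest the listener.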

Paper

The open-access paper can be downloaded from here.

The developed audio plug-in

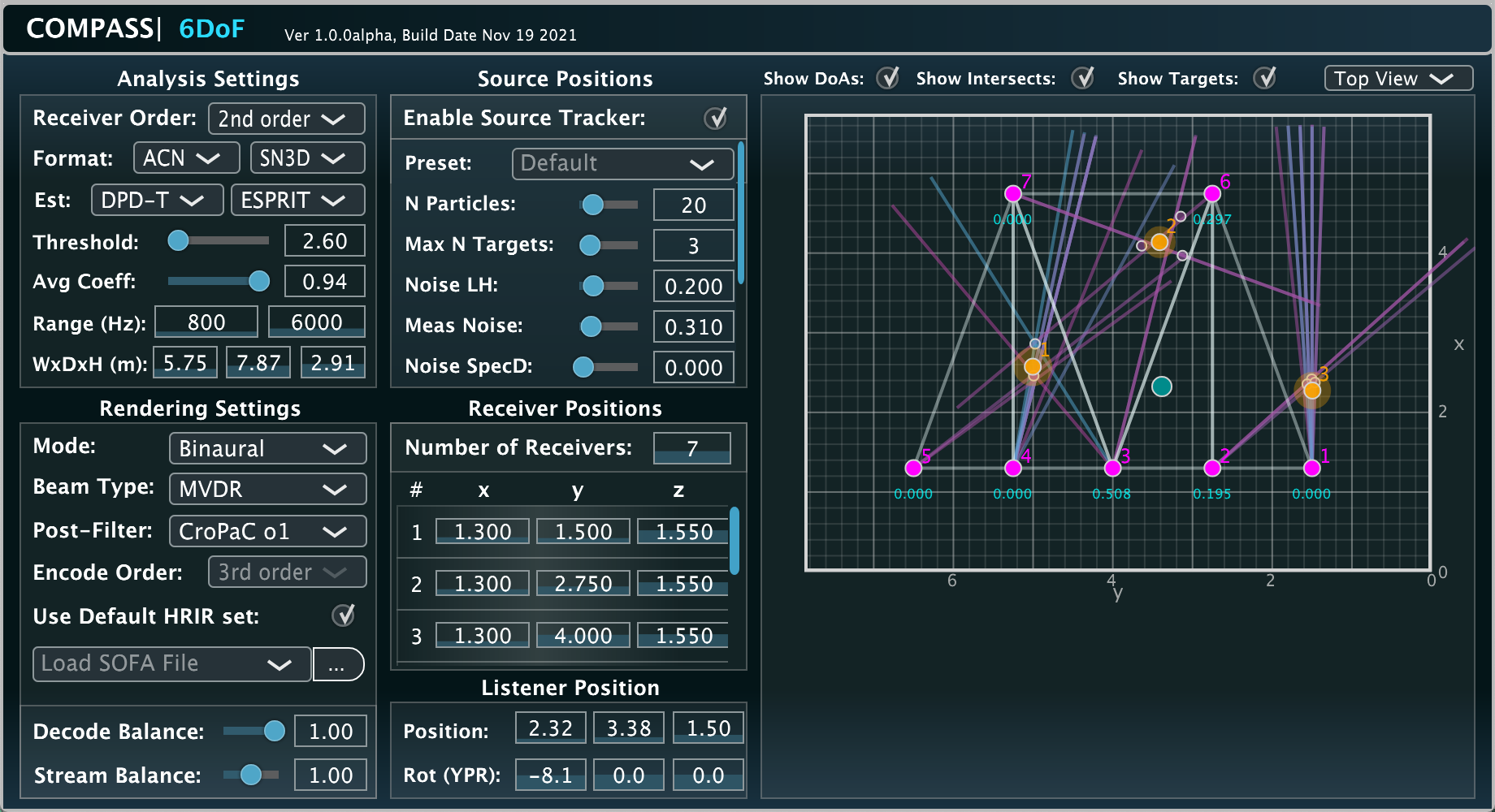

A VST audio plug-in was developed to demonstrate and evaluate the proposed 6DoF rendering method. It is installed alongside the SPARTA plug-in suite. More information about the developed system can be found in the paper, and also here.

Note that the version of the plug-in used for the evaluation in the paper is included in SPARTA installer version 1.5.2. The plug-in also includes a preset that configures it with the same parameter values as those used in the formal evaluations.

Listening test evaluation files

An example REAPER project may be found here; it is based on the spatial room impulse response dataset released here. The project contains all of the simulated microphone array recordings used for the evaluation described in the paper, which are binaurally rendered by the developed VST audio plug-in. The project also serves as a demonstration of the developed system. When the tracker is set to Enabled, the rendering corresponds to the parT test case in the paper. When it is disabled, the system instead renders the audio as described by the par test case. Setting the Decode Balance to 0 instead provides what the paper describes as the lin test case.
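The three test cases map onto two plug-in controls, Tracker and Decode Balance. The following Python sketch summarizes that mapping. The function name is hypothetical, and the sketch assumes that a Decode Balance of 0 gives the lin rendering regardless of the tracker setting. The word "instead" suggests this, but the text does not state it outright.

```python
def paper_test_case(tracker_enabled: bool, decode_balance: float) -> str:
    """Return the paper's test-case label for the given plug-in settings.

    Hypothetical helper; assumes Decode Balance = 0 overrides the tracker setting.
    """
    if decode_balance == 0:
        return "lin"  # linear interpolation-based alternative
    return "parT" if tracker_enabled else "par"

# The non-zero Decode Balance value below is an arbitrary placeholder.
print(paper_test_case(True, 1.0), paper_test_case(False, 1.0), paper_test_case(True, 0))
# parT par lin
```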

Note that the REAPER project includes simulated microphone array recordings for three different acoustic configurations of the variable acoustics room, denoted as 0pc, 50pc, and 100pc absorption enabled. The formal listening tests in the paper used the 100pc absorption variants. However, the other configurations may also provide further informal insights for the curious reader.

http://research.spa.aalto.fi/publications/papers/compass_6dof/ Updated on Wednesday Dec 8, 2021