Leo McCormack, Symeon Delikaris-Manias and Ville Pulkki

Parametric acoustic camera for real-time sound capture, analysis and tracking

Companion page for the acoustic camera paper presented at DAFx2017, Edinburgh, UK, 2017.

Abstract

This paper details a software implementation of an acoustic camera, which utilises a spherical microphone array and a spherical camera. The software builds on the Cross Pattern Coherence (CroPaC) spatial filter, which has been shown to be effective in reverberant and noisy sound field conditions. It is based on determining the cross spectrum between two coincident beamformers. The technique is exploited in this work to capture and analyse sound scenes by estimating a probability-like parameter of sounds appearing at specific locations. Current techniques that utilise conventional beamformers perform poorly in reverberant and noisy conditions, due to the side-lobes of the beams used for the power-map. In this work we propose an additional algorithm to suppress side-lobes based on the product of multiple CroPaC beams. A Virtual Studio Technology (VST) plug-in has been developed for both the transformation of the time-domain microphone signals into the spherical harmonic domain and the main acoustic camera software; both of which can be downloaded below.

Introduction

All plug-ins have been tested in REAPER64, which is the recommended DAW for them.

Please note that, due to the nature of the VST2 standard, each channel configuration has been compiled as a separate plug-in. For example, first-order spherical harmonic (or ambisonic) signals should be processed by the plug-ins with the "_o1" suffix.
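The channel count behind each configuration follows from the order: a spherical harmonic signal of order N has (N + 1)^2 channels. The sketch below lists the counts for orders 1 to 7. The helper names are hypothetical, and it is an assumption that the "_o1" suffix pattern extends to the other orders in the same way.

```python
def num_sh_channels(order: int) -> int:
    """Number of spherical harmonic channels for a given order: (N + 1)^2."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def order_suffix(order: int) -> str:
    """Suffix for a given order, e.g. "_o1" (pattern assumed for orders > 1)."""
    return f"_o{order}"


# Orders 1 to 7 are the range supported by the acoustic camera.
for order in range(1, 8):
    print(order_suffix(order), num_sh_channels(order))
```

A first-order configuration therefore carries 4 channels, a fourth-order Eigenmike configuration 25, and a seventh-order configuration 64.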

Mic2SH plug-in: Conversion of microphone array signals to spherical harmonic signals

The algorithms within the acoustic camera have been generalised to support spherical harmonic signals up to the 7th order. These signals can optionally be generated using the accompanying Mic2SH VST, which accepts input signals from spherical microphone arrays such as A-format microphones (1st order) or the Eigenmike (up to 4th order). In the case of the Eigenmike, Mic2SH also performs the frequency-dependent equalisation described in the paper, in order to mitigate the radial dependency incurred when estimating the pressure on a rigid sphere. Several equalisation strategies that are common in the literature have been implemented, such as Tikhonov-based regularised inversion and soft limiting. For more details, see the DAFx paper.
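To illustrate why regularisation is needed, the sketch below applies the textbook form of Tikhonov-regularised inversion to a single modal coefficient. The function name, the parameter `lam` and the example values are assumptions for illustration; the exact expression and parameter values used in Mic2SH are given in the paper, not here.

```python
def tikhonov_inverse(b: complex, lam: float) -> complex:
    """Regularised inverse of a modal coefficient b: conj(b) / (|b|^2 + lam^2)."""
    return b.conjugate() / (abs(b) ** 2 + lam ** 2)


lam = 0.01  # hypothetical regularisation parameter

# A strong mode is inverted almost exactly.
strong = tikhonov_inverse(complex(1.0), lam)

# A weak mode (typical at low frequencies for higher orders) would need a
# gain of 1 / 1e-4 = 10000 with plain inversion; the regularised gain
# stays bounded by 1 / (2 * lam).
weak = tikhonov_inverse(complex(1e-4), lam)

print(abs(strong), abs(weak), 1.0 / (2.0 * lam))
```

The gain peaks at 1 / (2 * lam) when |b| equals lam, so the regularisation parameter directly sets the maximum amplification applied to sensor noise.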

A new plug-in for converting signals from arbitrary microphone arrays to spherical harmonic signals is currently under development.

The MacOSX versions of the Mic2SH VSTs can be downloaded here:

- VSTs (zip, 23.8 MB)

- VSTs (zip, 28.8 MB)

AcCroPaC plug-in:

Please note that the AcCroPaC plug-in supports only a frame size of 2048 samples and expects a sampling rate of 48 kHz.

The acoustic camera supports spherical harmonic signals that conform to the ACN channel ordering and N3D normalisation conventions. These ACN/N3D signals can be generated using the Mic2SH plug-in if you are using an A-format microphone or an Eigenmike32.

Alternatively, if you are using a B-format microphone, we recommend the ambix_converter plug-in found here: http://www.matthiaskronlachner.com/?p=2015. This plug-in can convert from Furse-Malham channel ordering and normalisation (FuMa/FuMa) to ACN/N3D.
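For first-order signals the conversion amounts to a channel reordering and a per-channel gain. The sketch below shows this for a single sample; it is an illustration of the two conventions, not the ambix_converter implementation, and the function name is hypothetical. It covers first order only, since higher orders need different per-channel factors.

```python
import math


def fuma_to_acn_n3d_first_order(w, x, y, z):
    """Convert one first-order FuMa B-format sample (W, X, Y, Z) to ACN/N3D.

    FuMa stores W attenuated by 1/sqrt(2); N3D scales the first-order
    components by sqrt(3) relative to FuMa. ACN orders them W, Y, Z, X.
    """
    return (
        math.sqrt(2.0) * w,  # ACN 0: W, undoing the FuMa -3 dB scaling
        math.sqrt(3.0) * y,  # ACN 1: Y
        math.sqrt(3.0) * z,  # ACN 2: Z
        math.sqrt(3.0) * x,  # ACN 3: X
    )


print(fuma_to_acn_n3d_first_order(1.0 / math.sqrt(2.0), 0.1, 0.2, 0.3))
```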

The MacOSX versions of the AcCroPaC VSTs can be downloaded here:

- VSTs (zip, 7.8 MB)

- VSTs (zip, 33.3 MB)

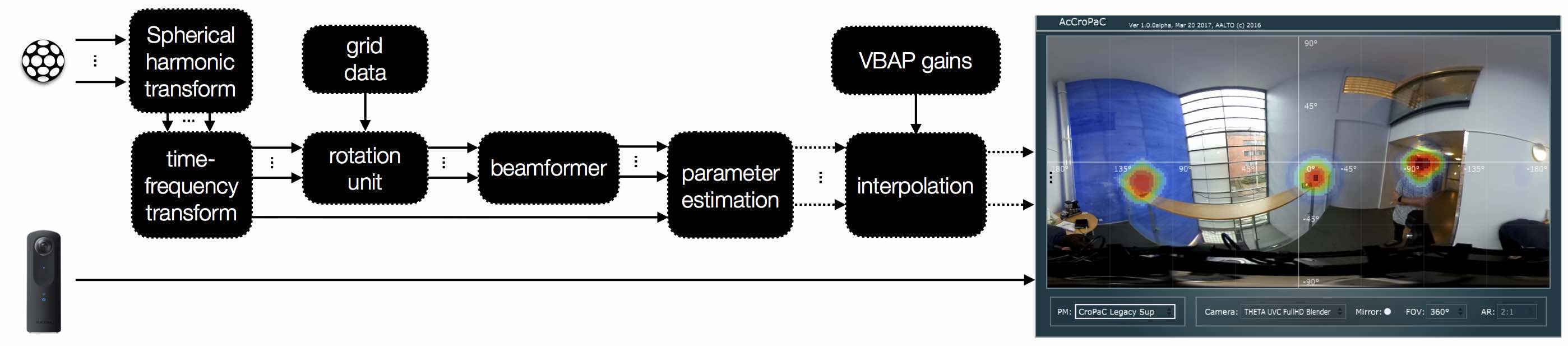

Block diagram

The time-domain microphone array signals are first transformed into spherical harmonic signals using the Mic2SH audio plug-in; the acoustic camera then transforms these signals into the time-frequency domain. For computational efficiency, the spherical harmonic signals are rotated after the time-frequency transform towards the points defined by the pre-computed spherical grid. The rotated signals are then fed into a beamformer unit, which forms the two beams that are required to compute the cross-spectrum-based parameter for each grid point. Note that when the side-lobe suppression mode is enabled, one parameter is estimated per roll and the resulting parameters are multiplied. For visualisation, the parameter value at each of the grid points is interpolated using VBAP and projected on top of the spherical video.
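The per-grid-point computation can be sketched as follows for a single time-frequency bin. This is a simplified illustration, not the plug-in's implementation: the function names are hypothetical, and the half-wave rectified, energy-normalised form of the parameter is an assumption chosen so that the value lies between 0 and 1. The exact definition is given in the paper.

```python
def cross_spectrum_parameter(s1: complex, s2: complex) -> float:
    """Rectified, energy-normalised cross-spectrum of two coincident beams.

    Assumed form for illustration: max(0, 2 Re[s1* s2] / (|s1|^2 + |s2|^2)).
    """
    energy = abs(s1) ** 2 + abs(s2) ** 2
    if energy == 0.0:
        return 0.0
    g = 2.0 * (s1.conjugate() * s2).real / energy
    return max(0.0, g)


def suppress_side_lobes(beam_pairs) -> float:
    """Multiply the parameters estimated for each roll of the beam pair."""
    product = 1.0
    for s1, s2 in beam_pairs:
        product *= cross_spectrum_parameter(s1, s2)
    return product


# Hypothetical beam outputs for one grid point at two rolls.
# Both rolls agree: the product stays high.
print(suppress_side_lobes([(1 + 0j, 1 + 0j), (1 + 0j, 1 + 0j)]))
# One roll shows no coherence (a side-lobe response): the product collapses.
print(suppress_side_lobes([(1 + 0j, 1 + 0j), (1 + 0j, 0 + 1j)]))
```

Because each factor is at most 1, the product can only keep or lower the value at a grid point, which is why a response seen at only some rolls is attenuated.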

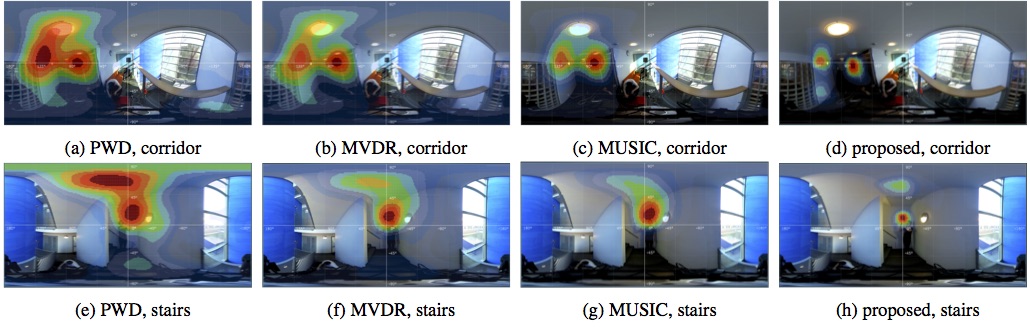

Results

An example taken from the paper: screenshots of the acoustic camera GUI when utilising the PWD, MVDR, MUSIC and proposed algorithms (left to right) in two reverberant environments (top to bottom).

How to cite our work

McCormack, L., Delikaris-Manias, S. and Pulkki, V., "Parametric Acoustic Camera for Real-Time Sound Capture, Analysis and Tracking," Proceedings of the 20th International Conference on Digital Audio Effects (DAFx-17), Edinburgh, UK, September 5-9, 2017. PDF link (7.4 MB)

Updated on Wednesday June 15, 2017
